Appreciation of OpenAI's Naming Art

All OpenAI models are documented here: https://platform.openai.com/docs/models/.

Without delving too far into the past, let’s start with the GPT-4 series and contemporary models. Here is my compiled table:

| Name | Description | Model Card Link |
|---|---|---|
| ChatGPT-4o | GPT-4o model used in ChatGPT | https://platform.openai.com/docs/models/chatgpt-4o-latest |
| GPT-4 | An older high-intelligence GPT model | https://platform.openai.com/docs/models/gpt-4 |
| GPT-4 Turbo | An older high-intelligence GPT model | https://platform.openai.com/docs/models/gpt-4-turbo |
| GPT-4 Turbo Preview | Deprecated. An older fast GPT model | https://platform.openai.com/docs/models/gpt-4-turbo-preview |
| GPT-4.1 | Smartest non-reasoning model | https://platform.openai.com/docs/models/gpt-4.1 |
| GPT-4.1 mini | Smaller, faster version of GPT-4.1 | https://platform.openai.com/docs/models/gpt-4.1-mini |
| GPT-4.1 nano | Fastest, most cost-efficient version of GPT-4.1 | https://platform.openai.com/docs/models/gpt-4.1-nano |
| GPT-4.5 Preview | Deprecated large model | https://platform.openai.com/docs/models/gpt-4.5-preview |
| GPT-4o | Fast, intelligent, flexible GPT model | https://platform.openai.com/docs/models/gpt-4o |
| GPT-4o Audio | GPT-4o models capable of audio inputs and outputs | https://platform.openai.com/docs/models/gpt-4o-audio-preview |
| GPT-4o mini | Fast, affordable small model for focused tasks | https://platform.openai.com/docs/models/gpt-4o-mini |
| GPT-4o mini Audio | Smaller model capable of audio inputs and outputs | https://platform.openai.com/docs/models/gpt-4o-mini-audio-preview |
| GPT-4o mini Realtime | Smaller realtime model for text and audio inputs and outputs | https://platform.openai.com/docs/models/gpt-4o-mini-realtime-preview |
| GPT-4o mini Search Preview | Fast, affordable small model for web search | https://platform.openai.com/docs/models/gpt-4o-mini-search-preview |
| GPT-4o mini Transcribe | Speech-to-text model powered by GPT-4o mini | https://platform.openai.com/docs/models/gpt-4o-mini-transcribe |
| GPT-4o mini TTS | Text-to-speech model powered by GPT-4o mini | https://platform.openai.com/docs/models/gpt-4o-mini-tts |
| GPT-4o Realtime | Model capable of realtime text and audio inputs and outputs | https://platform.openai.com/docs/models/gpt-4o-realtime-preview |
| GPT-4o Search Preview | GPT model for web search in Chat Completions | https://platform.openai.com/docs/models/gpt-4o-search-preview |
| GPT-4o Transcribe | Speech-to-text model powered by GPT-4o | https://platform.openai.com/docs/models/gpt-4o-transcribe |
| GPT-4o Transcribe Diarize | Transcription model that identifies who’s speaking when | https://platform.openai.com/docs/models/gpt-4o-transcribe-diarize |
| o1 | Previous full o-series reasoning model | https://platform.openai.com/docs/models/o1 |
| o1-mini | A small model alternative to o1 | https://platform.openai.com/docs/models/o1-mini |
| o1-pro | Version of o1 with more compute for better responses | https://platform.openai.com/docs/models/o1-pro |
| o3 | Reasoning model for complex tasks, succeeded by GPT-5 | https://platform.openai.com/docs/models/o3 |
| o3-pro | Version of o3 with more compute for better responses | https://platform.openai.com/docs/models/o3-pro |
| o3-mini | A small model alternative to o3 | https://platform.openai.com/docs/models/o3-mini |
| o4-mini | Fast, cost-efficient reasoning model, succeeded by GPT-5 mini | https://platform.openai.com/docs/models/o4-mini |

There are dedicated models for scenarios like audio (Audio), real-time (Realtime), search (Search), speech-to-text (Transcribe), and text-to-speech (TTS).

For the same scenario, such as audio (Audio), two models are provided: GPT-4o Audio and GPT-4o mini Audio. Users need to experiment to determine which meets their quality expectations.

In the speech-to-text (Transcribe) scenario, three models are available: GPT-4o Transcribe, GPT-4o mini Transcribe, and GPT-4o Transcribe Diarize.

ChatGPT-4o is a special version of GPT-4o used in ChatGPT; it is not offered for the other scenarios.
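The scenario keywords described above can be read mechanically from the display names in the table. Here is a minimal sketch of that grouping. The keyword-to-label mapping and the function names `scenario_of` and `group_by_scenario` are my own illustrations, not anything OpenAI provides:

```python
# Scenario keywords as they appear in the display names of the table.
# The labels on the right are this sketch's own wording (an assumption).
SCENARIOS = {
    "Audio": "audio",
    "Realtime": "real-time",
    "Search": "search",
    "Transcribe": "speech-to-text",
    "TTS": "text-to-speech",
}


def scenario_of(display_name: str) -> str:
    """Return the scenario label for a display name, or 'general' if no keyword matches."""
    for word in display_name.split():
        if word in SCENARIOS:
            return SCENARIOS[word]
    return "general"


def group_by_scenario(names):
    """Group display names by scenario label, preserving input order within each group."""
    groups = {}
    for name in names:
        groups.setdefault(scenario_of(name), []).append(name)
    return groups


if __name__ == "__main__":
    sample = [
        "GPT-4o",
        "GPT-4o Audio",
        "GPT-4o mini Audio",
        "GPT-4o Transcribe",
        "GPT-4o mini Transcribe",
        "GPT-4o Transcribe Diarize",
    ]
    for scenario, models in group_by_scenario(sample).items():
        print(scenario, "->", models)
```

Grouping this way shows how many sibling models each scenario has, but it says nothing about which one is better; that still takes experimentation.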

It’s intuitive that GPT-4.1 is better than GPT-4, but comparing GPT-4o (4-O) with GPT-4.1 or GPT-4 is less straightforward. Chronologically, GPT-4 came first, followed by GPT-4o and then GPT-4.1, yet the names alone do not tell you whether GPT-4o or GPT-4.1 performs better.

Here, I’ll define “better” as having higher accuracy in mathematical and reasoning tasks, excluding speed from the definition. Previously, OpenAI may have valued speed and intelligence equally, leading to confusing model comparisons. Fortunately, in the GPT-5 era, OpenAI began prioritizing intelligence over speed, eliminating ambiguity in model comparisons. A fast but incorrect answer is meaningless and only wastes time.

During the GPT-4 era, it took me nearly half a year to discover that o3 was the best model at the time. Once confirmed, I exclusively used it for debugging. Its description reads: “Reasoning model for complex tasks, succeeded by GPT-5.” OpenAI directly chose the o3 path to develop the next-generation model GPT-5.

I believe many users, like me, experimented with these models before settling on o3, which OpenAI could observe from backend usage data.

There’s also an isolated model o4-mini without a corresponding o4 model. The successor to o3 is GPT-5, while o3-mini has no successor. The successor to o4-mini is GPT-5 mini.

The transition to the GPT-5 era marked a watershed moment for OpenAI, as the company began prioritizing intelligence over speed. Abandoning so many specialized models was undoubtedly a difficult decision for a large enterprise, but I believe it was the right one.

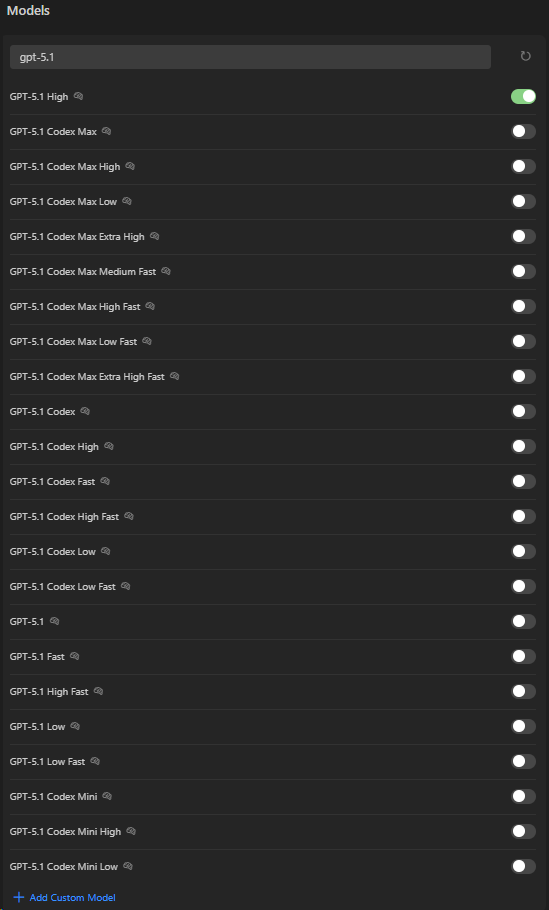

This is the GPT-5 series model selection interface in Cursor:

The GPT-5 series models have more systematic and intuitive naming conventions.

The new naming rules: Base Version + Specialization + Scale + Reasoning Strength + Compute Resources

Example: GPT-5.1-Codex-Max-High-Fast
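To make the rule concrete, here is a minimal sketch that splits such a name into its five slots. It rests on several assumptions of mine: that components are joined by hyphens, that they always appear in the order given above, that every slot after the base version is optional, and that the slot vocabularies contain only the values mentioned in this article (`Codex`, `Max`, `mini`, `High`, `Fast`). The names `ModelName` and `parse_model_name` are hypothetical, not part of any OpenAI or Cursor API:

```python
from dataclasses import dataclass
from typing import Optional

# Slot vocabularies, in naming order. Deliberately minimal: only values that
# appear in this article. Extend them for other names you encounter.
SLOTS = [
    ("specialization", {"codex"}),
    ("scale", {"max", "mini"}),
    ("reasoning", {"high"}),
    ("compute", {"fast"}),
]


@dataclass
class ModelName:
    base: str
    specialization: Optional[str] = None
    scale: Optional[str] = None
    reasoning: Optional[str] = None
    compute: Optional[str] = None


def parse_model_name(name: str) -> ModelName:
    """Split a name like 'GPT-5.1-Codex-Max-High-Fast' into its slots."""
    parts = name.split("-")
    if len(parts) < 2:
        raise ValueError(f"expected at least 'GPT-<version>', got {name!r}")
    # The base version spans the first two hyphen-separated parts, e.g. "GPT-5.1".
    parsed = ModelName(base="-".join(parts[:2]))
    slot_index = 0
    for token in parts[2:]:
        # Skip optional slots until one accepts this token.
        while slot_index < len(SLOTS) and token.lower() not in SLOTS[slot_index][1]:
            slot_index += 1
        if slot_index == len(SLOTS):
            raise ValueError(f"unrecognized or out-of-order component: {token!r}")
        setattr(parsed, SLOTS[slot_index][0], token)
        slot_index += 1
    return parsed


if __name__ == "__main__":
    print(parse_model_name("GPT-5.1-Codex-Max-High-Fast"))
```

Because the slots are matched in order, a name with components out of sequence is rejected instead of being silently misread, which is the property that makes the GPT-5 names easier to reason about.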

Although the combinations still result in numerous models, at least they’re no longer as bewildering as the GPT-4 series.