Hands-on Experience with GitHub Copilot Agent Mode

This post summarizes how to use GitHub Copilot in Agent mode, based on hands-on experience.

Prerequisites

- Use VS Code Insiders;

- Install the GitHub Copilot (Preview) extension;

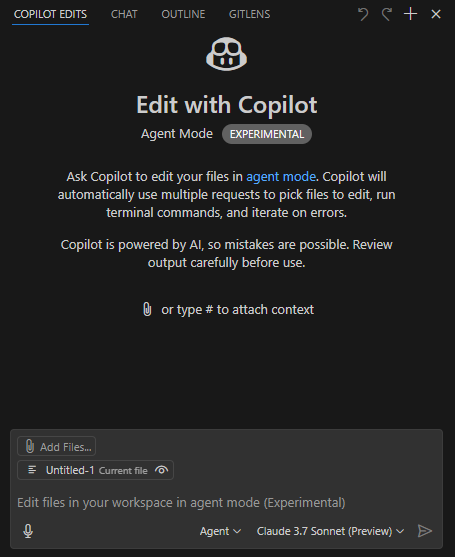

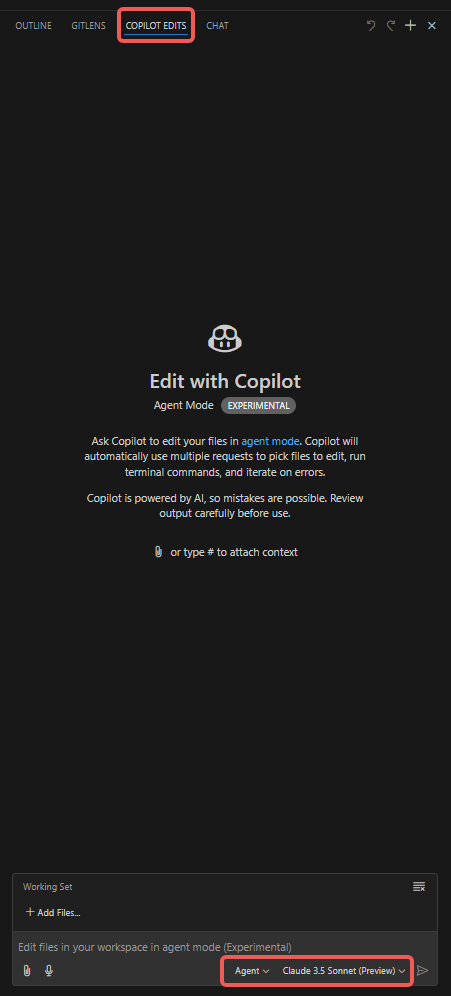

- Select the Claude 3.7 Sonnet (Preview) model, which is strong at code generation; other models may do better on speed, multimodal input (e.g. image recognition), or reasoning;

- Choose Agent as the mode.

Step-by-step

- Open the “Copilot Edits” tab;

- Attach items such as “Codebase”, “Get Errors”, “Terminal Last Commands”;

- Add files to the “Working Set”; it defaults to the currently opened file, but you can manually choose others (e.g. “Open Editors”);

- Add “Instructions”: type your prompt, including anything you particularly want the Copilot Agent to pay attention to;

- Click “Send” and watch the Agent perform.

Additional notes

- The lint features of VS Code language extensions report Errors and Warnings; the Agent can fix the code automatically based on these diagnostics.

- As the conversation continues, the modifications may drift from your intent. Keep every session tightly scoped to a single clear topic; finish the short-term goal and start a new task rather than letting the session grow too long.

- Under “Working Set”, the “Add Files” menu provides a “Related Files” option which recommends related sources.

- Watch the line count of individual files to avoid burning tokens.

- Generate the baseline code first, then the tests. This lets the Agent debug and verify its own work against the test results.

- To constrain modifications, you can add instructions like the following to settings.json (for reference); the first one tells the Agent to modify only files in the designated directory:

    "github.copilot.chat.codeGeneration.instructions": [
        {
            "text": "Only modify files under ./script/; leave others unchanged."
        },
        {
            "text": "If the target file exceeds 1,000 lines, place new functions in a new file and import them; if the change would make the file too long you may disregard this rule temporarily."
        }
    ],
    "github.copilot.chat.testGeneration.instructions": [
        {
            "text": "Generate test cases in the existing unit-test files."
        },
        {
            "text": "After any code changes, always run the tests to verify correctness."
        }
    ],
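
These instructions are natural-language guidance, so it seems prudent to verify afterwards that the Agent really stayed inside the allowed directory. Below is a minimal sketch of such a check; the function name `files_outside` and the default directory `script` (taken from the instruction text above) are illustrative assumptions, not part of Copilot.

```python
from pathlib import PurePosixPath


def files_outside(changed_paths, allowed_dir="script"):
    """Return the changed paths that are not located inside allowed_dir.

    Paths are expected to be repository-relative with forward slashes,
    e.g. as printed by `git diff --name-only`. Paths containing ".."
    are not resolved by this sketch.
    """
    allowed = PurePosixPath(allowed_dir)
    outside = []
    for raw in changed_paths:
        path = PurePosixPath(raw)  # a leading "./" is dropped by pathlib
        # A file is inside allowed_dir if allowed_dir is one of its ancestors.
        if allowed not in path.parents:
            outside.append(raw)
    return outside
```

One way to use it is to feed it the output lines of `git diff --name-only` after an Agent run and review or revert whatever it returns.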

Common issues

Desired business logic code is not produced

Break large tasks into small ones; one session per micro-task. A bloated context makes the model’s attention scatter.

Finding the right amount of context for a single chat is tricky: too little and too much both lead to misunderstanding.

DeepSeek’s model reportedly copes better with this attention problem, but at the time of writing it could only be used in Cursor through the DeepSeek API; I have not verified how effective it is.

Slow response

Understand the token mechanism: input tokens are cheap and fast, output tokens are expensive and slow.

If a single file is huge but only three lines need to change, the extra context and output still consume many tokens and a lot of time.

Therefore keep files compact; avoid massive files and huge functions. Split large ones early and reference them.
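
To find candidates for splitting, a small script can list the files that exceed a line limit. This is only a sketch: the function name `oversized_files`, the 1,000-line default (borrowed from the instruction shown earlier), and the `.py` suffix filter are assumptions to adapt to your project.

```python
import os


def oversized_files(root, max_lines=1000, suffixes=(".py",)):
    """Return (path, line_count) pairs for files under root longer than max_lines."""
    results = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # Only look at source files with the chosen suffixes.
            if not name.endswith(suffixes):
                continue
            path = os.path.join(dirpath, name)
            with open(path, "r", encoding="utf-8", errors="replace") as handle:
                count = sum(1 for _ in handle)
            if count > max_lines:
                results.append((path, count))
    # Largest files first, since they are the best candidates for splitting.
    return sorted(results, key=lambda item: item[1], reverse=True)
```

Calling `oversized_files(".")` from the project root returns the longest files first, which gives a rough order in which to split them.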

Domain understanding problems

Understanding relies on comments and test files. Supplement the code with sufficient comments and test cases so the Copilot Agent can grasp the business logic.

The code and comments the Agent produces also serve as a quick sanity check: read them to confirm that it understood what you expected.

Extensive debugging after large code blocks

Generate baseline code for the feature, then its tests, then adjust the logic. The Agent can debug autonomously and self-validate.

It will ask permission to run tests, read the terminal output, determine correctness, and iterate on failures until tests pass.
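
The loop described above can be pictured roughly as follows. This is not how Copilot is implemented; it is a sketch of the run, read, fix cycle in which `apply_fix` is a hypothetical callback standing in for the Agent's edit step and `max_rounds` is an assumed safety limit.

```python
import subprocess


def run_tests(command):
    """Run the test command and return (passed, combined_output)."""
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode == 0, result.stdout + result.stderr


def iterate_until_green(command, apply_fix, max_rounds=5, runner=run_tests):
    """Re-run the tests, passing each failure output to apply_fix, until they pass.

    apply_fix is a hypothetical stand-in for the Agent editing the code.
    Returns the round in which the tests passed, or None if they never did.
    """
    for round_number in range(1, max_rounds + 1):
        passed, output = runner(command)
        if passed:
            return round_number
        # Hand the terminal output to the fixer, then try again.
        apply_fix(output)
    return None
```

The point of the sketch is that the tests act as the stopping condition: without them the loop has nothing to check its edits against.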

In other words, what you need most is a good understanding of the domain; the amount of code you write by hand is small. Prolonged debugging happens only when both the test code and the business code are wrong, so that the Agent can produce neither correct tests nor correct logic.

Takeaways

Understand the token cost model: input context is cheap, output code is costly. Unchanged lines in a file may still count toward output; the evidence is that unmodified code streams back slowly.
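
A back-of-the-envelope model makes the asymmetry concrete. Everything numeric here is a made-up placeholder (relative prices per token, tokens per second), not a published figure for any model, and `estimate_turn` is a hypothetical helper.

```python
def estimate_turn(input_tokens, output_tokens,
                  input_price=1.0, output_price=5.0,
                  input_rate=2000.0, output_rate=50.0):
    """Return (relative_cost, seconds) for one request.

    Prices are relative units per token and rates are tokens per second.
    All four defaults are illustrative assumptions only.
    """
    cost = input_tokens * input_price + output_tokens * output_price
    seconds = input_tokens / input_rate + output_tokens / output_rate
    return cost, seconds


# Same 10,000-token file as input in both cases.
# Case A: the whole file is streamed back as output.
whole_file = estimate_turn(input_tokens=10_000, output_tokens=10_000)
# Case B: only a 30-token change is emitted.
small_edit = estimate_turn(input_tokens=10_000, output_tokens=30)
```

Under these assumed numbers the output term dominates both cost and time in case A, which matches the observation that large files make every interaction slower.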

Keep individual files small where possible. As you use the Agent, you will clearly notice interactions getting faster or slower with file size.